In this series of posts, we have been discussing the statistics that are reported on the Item Analysis Report, including the difficulty index, correlational discrimination, and high-low discrimination.

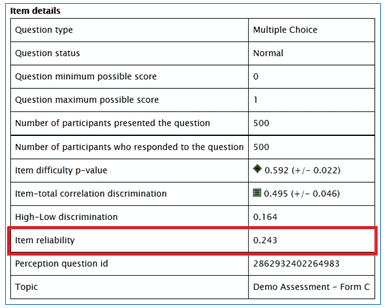

The final statistic reported on the Item Analysis Report is the item reliability. Item reliability is the product of the standard deviation of item scores and a correlational discrimination index (Item-Total Correlation Discrimination in the Item Analysis Report). It therefore reflects how much the item contributes to the spread of total scores: an item contributes more when participants’ scores on it vary and when those scores track the total score. As with assessment reliability, higher values are better.
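As a minimal sketch of that product, the Python snippet below computes the index for a small, made-up response matrix. The data, the function name `item_reliability`, and the choice of an uncorrected Pearson item-total correlation with population standard deviations are all assumptions for illustration; the report's own calculation may differ in these details.

```python
import numpy as np

# Hypothetical scored responses: rows are participants, columns are items (1 = correct).
scores = np.array([
    [1, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 0],
    [0, 0, 1, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
], dtype=float)

def item_reliability(scores):
    """Return (item_sd, item_total_r, item_reliability) for each column."""
    total = scores.sum(axis=1)
    # Population standard deviation (ddof=0) of each item's scores.
    item_sd = scores.std(axis=0)
    # Uncorrected Pearson correlation between each item and the total score
    # (the item itself is included in the total).
    item_total_r = np.array(
        [np.corrcoef(scores[:, j], total)[0, 1] for j in range(scores.shape[1])]
    )
    return item_sd, item_total_r, item_sd * item_total_r

item_sd, item_total_r, reliability = item_reliability(scores)
for j, (sd, r, rel) in enumerate(zip(item_sd, item_total_r, reliability), start=1):
    print(f"Item {j}: SD={sd:.3f}  r={r:.3f}  reliability={rel:.3f}")
```

Under these assumptions, the item reliability values sum to the standard deviation of the total scores. An item that every participant answers the same way has zero variance, so its correlation is undefined and would need separate handling.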

Like the other statistics in the Item Analysis Report, item reliability is used primarily to inform decisions about item retention. Crocker and Algina (Introduction to Classical and Modern Test Theory) describe three ways that test developers might use the item reliability index.

1) Choosing Between Two Items in Form Construction

If two items have similar discrimination values, but one item has a higher standard deviation of item scores, then that item will have higher item reliability and will contribute more to the assessment’s reliability. All else being equal, the test developer might decide to retain the item with higher reliability and keep the lower-reliability item in the bank as a backup.
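A quick illustration with invented numbers: suppose two dichotomously scored items share a discrimination of 0.40, but item A has a difficulty (proportion correct) of 0.50 and item B has 0.90. For a 0/1 item the standard deviation is `sqrt(p * (1 - p))`, so the comparison can be sketched as follows. The item labels and values are hypothetical.

```python
import math

def dichotomous_sd(p):
    """Standard deviation of a 0/1 item whose proportion correct is p."""
    return math.sqrt(p * (1 - p))

discrimination = 0.40  # assumed equal for both items

rel_a = dichotomous_sd(0.50) * discrimination  # SD = 0.50
rel_b = dichotomous_sd(0.90) * discrimination  # SD = 0.30

print(f"Item A reliability: {rel_a:.2f}")
print(f"Item B reliability: {rel_b:.2f}")
```

Item A's higher score variance gives it the higher item reliability (0.20 versus 0.12), even though the two items discriminate equally well.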

2) Building a Form with a Required Assessment Reliability Threshold

As Crocker and Algina demonstrate, Cronbach’s Alpha can be calculated as a function of the standard deviations of items’ scores and items’ reliabilities. If the test developer needs the assessment’s reliability (as measured by Cronbach’s Alpha) to meet a minimum value, they can use these two item statistics to build a form that will yield the desired level of internal consistency.
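One way to see this relationship is to compute alpha twice for the same data: once from the item standard deviations and item reliabilities, and once directly from item and total score variances. The sketch below assumes population standard deviations and uncorrected item-total correlations; the response matrix and the function name `alpha_from_item_stats` are made up for illustration.

```python
import numpy as np

def alpha_from_item_stats(item_sd, item_rel):
    """Cronbach's alpha from item SDs and item reliability indices.

    alpha = k / (k - 1) * (1 - sum(sd_i ** 2) / (sum(rel_i)) ** 2)
    """
    k = len(item_sd)
    sum_item_var = np.sum(np.square(item_sd))
    total_var = np.sum(item_rel) ** 2  # squared sum of item reliabilities
    return k / (k - 1) * (1 - sum_item_var / total_var)

# Hypothetical scored responses: rows are participants, columns are items.
scores = np.array([
    [1, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 0],
    [0, 0, 1, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
], dtype=float)

k = scores.shape[1]
total = scores.sum(axis=1)
item_sd = scores.std(axis=0)  # population SD per item
item_rel = np.array(
    [item_sd[j] * np.corrcoef(scores[:, j], total)[0, 1] for j in range(k)]
)

alpha = alpha_from_item_stats(item_sd, item_rel)

# Direct calculation from item variances and total score variance, for comparison.
alpha_direct = k / (k - 1) * (1 - scores.var(axis=0).sum() / total.var())

print(f"alpha from item statistics: {alpha:.4f}")
print(f"alpha computed directly:    {alpha_direct:.4f}")
```

Both routes give the same value for this data set, which is why a developer working only from banked item statistics can still project a form's alpha.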

3) Building a Form with a Required Total Score Variance Threshold

Crocker and Algina explain that the total score variance is equal to the square of the sum of the item reliability indices (equivalently, the total score standard deviation is the sum of those indices). Test developers can therefore keep adding items to a form, based on their item reliability values, until the form meets the desired threshold for total score variance.
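A simple greedy version of this procedure might look like the sketch below. The item bank, the threshold, and the function name `build_form` are hypothetical. The sketch also assumes that banked item reliability values carry over unchanged to the new form, which is only an approximation because each value depends on the total score it was computed against.

```python
def build_form(bank, target_variance):
    """Add items in descending item reliability until the projected
    total score variance, (sum of item reliabilities) ** 2, meets the target.

    Returns the selected item ids and the projected variance. If the bank
    runs out first, every item is returned and the target is not met.
    """
    selected = []
    running_sum = 0.0
    for item_id, rel in sorted(bank.items(), key=lambda kv: kv[1], reverse=True):
        if running_sum ** 2 >= target_variance:
            break
        selected.append(item_id)
        running_sum += rel
    return selected, running_sum ** 2

# Hypothetical item bank: item id -> item reliability index.
bank = {"Q5": 0.12, "Q2": 0.20, "Q6": 0.10, "Q1": 0.22, "Q4": 0.15, "Q3": 0.18}

selected, projected_variance = build_form(bank, target_variance=0.50)
print(selected)
print(f"projected total score variance: {projected_variance:.4f}")
```

With these numbers the first four items (Q1 through Q4) sum to 0.75, for a projected variance of about 0.56, so the loop stops before adding Q5. In practice a developer would also weigh content coverage and difficulty rather than selecting on item reliability alone.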