In my last post, Understanding Assessment Validity: Criterion Validity, I discussed criterion validity and showed how an organization can go about doing a simple criterion-related validity study with little more than Excel and a smile. In this post I will talk about content validity: what it is and how one can undertake a content-related validity study.

Content validity deals with whether the assessment content and composition are appropriate, given what is being measured. For example, does the test content reflect the knowledge/skills required to do a job, or to demonstrate that one has grasped the course content sufficiently? In the sales course exam example I discussed in the last post, one would want to ensure that the questions on the exam cover the course's content areas in proportion to the emphasis each area receives in the course. If 40% of the four-day sales course deals with product demo techniques, then we would want about 40% of the questions on the exam to measure knowledge/skills in the area of demo skills.

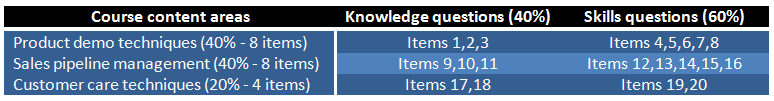

I like to think of content validity in two slices. The first slice of the content validity pie is addressed when an assessment is first being developed: content validity should be one of the primary considerations in assembling the assessment. Developing a “test blueprint” that outlines the relative weightings of content covered in a course and how that maps onto the number of questions in an assessment is a great way to help ensure content validity from the start. Questions are, of course, classified into specific topics and subtopics when they are authored. Before an assessment is put into production and administered to actual participants, an independent group of subject matter experts should review the assessment and compare the questions on it against the blueprint. An example of a test blueprint is provided below for the sales course exam, which has 20 questions in total.

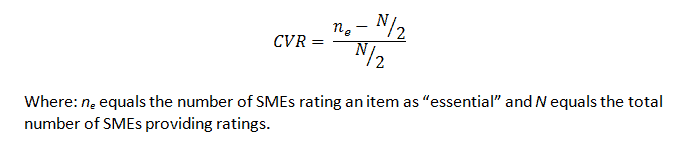
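To make the blueprint arithmetic concrete, here is a minimal sketch of turning topic weightings into question counts for a 20-question exam. Only the 40% weighting for product demo techniques comes from the example above; the other topic names and weightings, and the `allocate_questions` helper itself, are made up for illustration. The helper uses a largest-remainder rounding rule, which is one reasonable way to keep the counts summing to the exam length when the weightings don't divide evenly.

```python
from fractions import Fraction


def allocate_questions(weights, total_questions):
    """Split total_questions across topics in proportion to their weights.

    Uses largest-remainder rounding: every topic gets the whole-number part
    of its exact share, and any questions left over go to the topics with
    the largest fractional parts.
    """
    total_weight = sum(weights.values())
    exact = {
        topic: Fraction(weight) * total_questions / total_weight
        for topic, weight in weights.items()
    }
    counts = {topic: int(share) for topic, share in exact.items()}
    leftover = total_questions - sum(counts.values())
    by_remainder = sorted(
        exact, key=lambda topic: exact[topic] - counts[topic], reverse=True
    )
    for topic in by_remainder[:leftover]:
        counts[topic] += 1
    return counts


# Weightings as percentages of course time.
blueprint_weights = {
    "Product demo techniques": 40,  # from the sales course example
    "Prospecting": 25,              # hypothetical
    "Handling objections": 20,      # hypothetical
    "Closing": 15,                  # hypothetical
}

question_counts = allocate_questions(blueprint_weights, total_questions=20)
for topic, count in question_counts.items():
    print(f"{topic}: {count} questions")
```

With these weightings the demo topic ends up with 8 of the 20 questions, which is the 40% we were aiming for. The same counts can then be handed to the SME reviewers as the target to compare the assembled exam against.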

The second slice of content validity is addressed after an assessment has been created. There are a number of methods available in the academic literature outlining how to conduct a content validity study. One way, developed by Lawshe in the mid-1970s, is to get a panel of subject matter experts (SMEs) to rate whether the knowledge or skill measured by each question on an assessment is “essential,” “useful, but not essential,” or “not necessary” to the performance of what is being measured (i.e., the construct). The more SMEs who agree that an item is essential, the higher that item's content validity. Lawshe also developed a funky formula called the “content validity ratio” (CVR) that can be calculated for each question. The average of the CVR across all questions on the assessment can be taken as a measure of the overall content validity of the assessment.

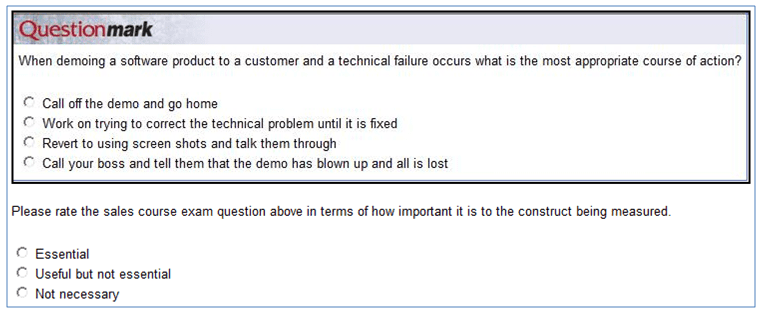
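For anyone who would rather script the calculation than do it by hand, here is a small sketch. It assumes the usual form of Lawshe's ratio, CVR = (n_e - N/2) / (N/2), where n_e is the number of SMEs who rated the question “essential” and N is the size of the panel. The six-person panel, the ratings, and the names `content_validity_ratio` and `sme_ratings` are all invented for illustration.

```python
def content_validity_ratio(n_essential, n_panelists):
    """Lawshe's CVR for one question: (n_e - N/2) / (N/2).

    Ranges from -1 (nobody rated it essential) to +1 (everyone did);
    0 means exactly half of the panel rated it essential.
    """
    if n_panelists <= 0:
        raise ValueError("The panel needs at least one SME")
    half = n_panelists / 2
    return (n_essential - half) / half


# Hypothetical ratings from a six-person SME panel, one list per question.
sme_ratings = {
    "Q1": ["essential"] * 6,
    "Q2": ["essential"] * 3 + ["useful"] * 3,
    "Q3": ["essential"] * 5 + ["useful"] * 1,
    "Q4": ["essential"] * 1 + ["useful"] * 2 + ["not necessary"] * 3,
}

cvr_by_question = {
    question: content_validity_ratio(ratings.count("essential"), len(ratings))
    for question, ratings in sme_ratings.items()
}

# The mean CVR across questions serves as the overall index for the exam.
mean_cvr = sum(cvr_by_question.values()) / len(cvr_by_question)

for question, cvr in cvr_by_question.items():
    print(f"{question}: CVR = {cvr:+.2f}")
print(f"Mean CVR = {mean_cvr:.2f}")
```

In this made-up panel, Q1 gets a CVR of 1.0 because every SME rated it essential, while Q2 lands at 0.0 because the panel split evenly. A question like Q4, with a negative CVR, would be an obvious candidate for revision or removal.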

You can use Questionmark Perception to easily conduct a CVR study by taking an image of each question on an assessment (e.g., sales course exam) and creating a survey question for each assessment question to be reviewed by the SME panel, similar to the example below.

You can then use the Questionmark Survey Report or other Questionmark reports to review and present the content validity results.

So how does “face validity” relate to content validity? Well, face validity is more about the subjective perception of what the assessment is trying to measure than about conducting validity studies. For example, if our salespeople sat down after the four-day sales course to take the sales course exam and all the questions on the exam were asking about things that didn’t seem related to the information they had just learned on the course (e.g., what kind of car they would like to drive or how far they can hit a golf ball), the salespeople would not feel that the exam was very “face valid,” as it wouldn’t appear to measure what it is supposed to measure. Face validity, therefore, has to do with whether an assessment looks valid or feels valid to the participant. Even though it rests on perception rather than on a formal study, face validity still matters: if participants or instructors don’t buy in to the assessment being administered, they may not take it seriously, they may complain about and appeal their results more often, and so on.

In my next post I will turn the dial up to 11 and discuss the ins and outs of construct validity.