In previous posts I discussed some of the theory and applications of classical test theory and test score reliability. For my next series of posts, I’d like to explore the exciting realm of validity. I will discuss some of the traditional thinking in the area of validity as well as some new ideas, and I’ll share applied examples of how your organization could undertake validity studies.

According to the “standards bible” of educational and psychological testing, the Standards for Educational and Psychological Testing (AERA, APA, & NCME, 1999), validity is defined as “The degree to which evidence and theory support the interpretations of test scores entailed by proposed uses of tests.”

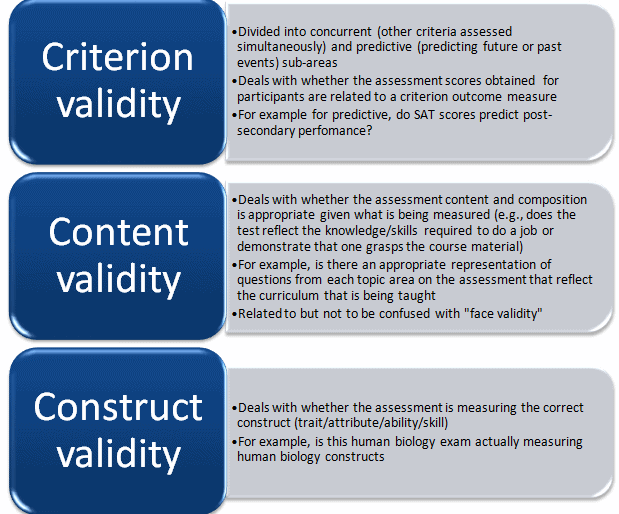

The traditional thinking around validity, familiar to most people, is that there are three main types: criterion-related validity, content-related validity, and construct-related validity.

The most recent thinking on validity takes a more unified approach, which I will cover in more detail in upcoming posts.

Now here is something you may have heard before: “In order for an assessment to be valid it must be reliable.” What does this mean? As we learned in previous Questionmark blog posts, test score reliability refers to how consistently an assessment measures the same thing. One criterion for making the statement, “Yes, this assessment is valid,” is that the assessment has acceptable test score reliability, for example a high Cronbach’s Alpha value as reported in the Questionmark Test Analysis Report and Results Management System (RMS). The other criteria for making that statement are evidence of criterion-related validity, content-related validity, and construct-related validity.
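For readers who want to see what sits behind a reliability index like Cronbach’s Alpha, here is a minimal sketch in Python using the standard textbook formula: the number of items divided by one less than that number, multiplied by one minus the ratio of summed item variances to the variance of total scores. The function name `cronbach_alpha` and the tiny `responses` data set are made up for illustration; this is not necessarily how any particular reporting tool computes or presents the value.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Estimate Cronbach's Alpha from a participants-by-items score table.

    scores: list of rows, one row per participant, one value per item.
    Uses the sample variance (n - 1 denominator) throughout.
    """
    n_items = len(scores[0])
    if n_items < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two participants")

    # Variance of each item (column) across participants, summed.
    item_columns = list(zip(*scores))
    sum_item_variances = sum(variance(col) for col in item_columns)

    # Variance of each participant's total score.
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")

    return (n_items / (n_items - 1)) * (1 - sum_item_variances / total_variance)


# Hypothetical data: 5 participants, 4 items scored 1 (correct) or 0 (incorrect).
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]

alpha = cronbach_alpha(responses)
print(round(alpha, 3))  # approximately 0.8 for this made-up data
```

A real analysis would use far more participants than this, and what counts as an “acceptable” value depends on the stakes and purpose of the assessment.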

In my next posts on this topic, I will provide illustrative examples of how organizations might investigate each of these traditionally defined types of validity for their assessment programs.