In my last few posts, I spoke about validity. You may recall that our big takeaway was that validity has to do with the inferences we make about assessment results.

With many of our customers conducting criterion-referenced assessments, I’d like to use my next few posts to talk about setting standards that guide inferences about outcomes (e.g., pass and fail).

I’ll start by discussing the Angoff Method. Questionmark has some great resources on it, including a recorded webinar, and I encourage you to use them as a reference if you plan on using this method to set standards. Just to summarize, there are five key steps in the Angoff Method:

1. Select the raters.

2. Take the assessment.

3. Rate the items.

4. Review the ratings.

5. Determine the cut score.

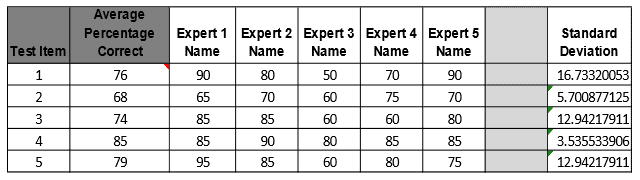

Expert Ratings Spreadsheet Example

Using the Angoff Method to Set Cut Scores (Wheaton & Parry, 2012)
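To make the last step concrete, here is a minimal sketch of one common way to turn a ratings spreadsheet into a cut score. It assumes each rating is a rater's estimate of the probability that a minimally qualified participant answers the item correctly, that each rater's recommended cut score is the sum of their ratings, and that the group's cut score is the mean of those sums. The rater names, the ratings, and the function `angoff_cut_score` are all hypothetical and are not taken from the webinar.

```python
from statistics import mean

# Hypothetical data: ratings[rater][i] is that rater's estimate of the
# probability that a minimally qualified participant answers item i correctly.
ratings = {
    "rater_a": [0.9, 0.7, 0.6, 0.8, 0.5],
    "rater_b": [0.8, 0.6, 0.7, 0.9, 0.4],
    "rater_c": [1.0, 0.8, 0.5, 0.7, 0.6],
}


def angoff_cut_score(ratings):
    """Return (group_cut_score, per_rater_cut_scores) in raw score points.

    Assumption: a rater's recommended cut score is the sum of their item
    ratings, and the group cut score is the mean of the raters' sums.
    """
    if not ratings:
        raise ValueError("at least one rater is required")
    per_rater = {rater: sum(items) for rater, items in ratings.items()}
    return mean(per_rater.values()), per_rater


group_cut, per_rater = angoff_cut_score(ratings)
print(f"Recommended cut score: {group_cut:.2f} out of 5 items")
for rater, total in per_rater.items():
    print(f"  {rater}: {total:.2f}")
```

Printing each rater's total alongside the group value makes it easy to see who sits far from the rest of the panel before the ratings are reviewed.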

Some psychometricians repeat steps 3-5 in a modified version of this method. In my experience, when raters compare their results, their second ratings often move toward the group mean. If the assessment has been field tested, the psychometrician may also use the first round of ratings to tell the raters how many of the participants would have passed based on their recommended cut score (impact data).
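Impact data is simple to compute once you have field-test scores. The sketch below assumes raw scores on the same scale as the cut score and assumes that a participant passes when their score is at or above the cut score. The scores and the function `impact_pass_rate` are hypothetical, for illustration only.

```python
def impact_pass_rate(scores, cut_score):
    """Return the fraction of field-test participants who would pass.

    Assumption: a participant passes when score >= cut_score.
    """
    if not scores:
        raise ValueError("at least one field-test score is required")
    passed = sum(1 for score in scores if score >= cut_score)
    return passed / len(scores)


# Hypothetical raw scores from a field test of a five-item assessment.
field_test_scores = [2, 3, 3, 4, 4, 4, 5, 5, 1, 3]

rate = impact_pass_rate(field_test_scores, cut_score=3.5)
print(f"{rate:.0%} of field-test participants would have passed")
```

Running the same function against each rater's individual cut score shows the panel how much the pass rate would swing between the most lenient and the most severe rater.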

Whether or not you choose to do a second round of rating depends on your preference. A second round means that your raters’ results may be biased by the group’s ratings and impact data, but it also serves to rein in outliers that may skew the group’s recommended cut score. This latter problem can also be mitigated by having a large number of representative raters.

As part of step 3, psychometricians train raters to rate items, and the toughest part is defining a minimally qualified participant. I find that raters often make the mistake of discussing what a minimally qualified participant should be able to do rather than what they can do. Taking the time to nail down this definition will help to calibrate the group and temper that one overzealous rater who insists that participants get 100% of items correct to pass the assessment.

The definition of a minimally qualified participant depends on the observable variables you are measuring to make inferences about the construct. If your assessment has a blueprint, your psychometrician may guide the raters in a discussion of what a minimally qualified participant is able to do in each content area.

For example, if the assessment has a content area about ingredients in a peanut butter sandwich, there may be a brief discussion to confirm that a minimally qualified participant knows how to unscrew the lid of the peanut butter jar.

This example is silly (and delicious), but this level of detail is valuable when two of your raters disagree about what a minimally qualified participant is able to do. Resolving these disagreements before rating items helps to ensure that differences in ratings are a result of raters’ opinions and not artifacts of misunderstandings about what it means to be minimally qualified.