I was recently asked a common question about creating assessments: How many questions are needed on an assessment in order to obtain valid and reliable participant scores? The answer depends on the context and purpose of the assessment and on how the scores are used. For example, if an organization is administering a low-stakes quiz designed to facilitate learning during study, with on-the-spot question-level feedback and no summary scores, then one question would be enough (although more would probably be better to achieve the intended purpose). If no summary scores (e.g., an overall assessment score) are calculated, or if these overall scores are not used for anything, then a very small number of questions is fine.

However, if an organization is administering an end-of-course exam that a participant has to pass in order to complete a course, the number of questions on that exam is important. (A few questions aren’t going to cut it!) In psychometric terms, the issue is whether very few questions would provide enough measurement information to allow someone to draw conclusions from the score obtained (e.g., does this participant know enough to be considered proficient?).

Ever wonder why you have to answer so many questions on a certification or licensing exam? One rarely gets to answer only 2-3 questions on a driving test, and certainly not on a chartered accountant licensing exam. Such exams often have close to 100 questions. One reason is that more individual measurements of what a participant knows and can do are needed to ensure that the reliability of the scores obtained is high (and therefore that the measurement error is low). Individual measurements are questions, and if we asked a participant only one question on an accounting licensing exam, we likely would not get a reliable estimate of the participant’s accounting knowledge and skills. Reliability is required for an assessment score to be considered valid, and generally the more questions on an assessment (up to a practical limit), the higher the reliability.

Generally, an organization would have a target reliability value in mind, which helps determine the minimum number of questions needed to achieve the measurement accuracy required in a given context. For example, in a high-stakes testing program where people are being certified or licensed based on their assessment scores, a reliability of 0.9 or higher (the closer to 1 the better) would likely be required. Once a minimum reliability target is established, one can estimate how many items might be required to achieve it.

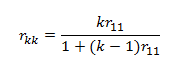

An organization could administer a pilot beta test of an assessment and run the Test Analysis Report to obtain the Cronbach’s Alpha test reliability coefficient. One could then use the Spearman-Brown prophecy formula (described further in “Psychometric Theory” by Nunnally & Bernstein, 1994) to estimate how much the internal consistency reliability will increase if the number of questions on the assessment increases. Writing $r_{kk}$ for the estimated reliability of the lengthened assessment:

$$r_{kk} = \frac{k \, r_{11}}{1 + (k - 1) \, r_{11}}$$

Where:

- k=the increase in length of the assessment (e.g., k=3 would mean the assessment is 3x longer)

- r11=the existing internal consistency reliability of the assessment

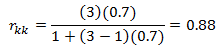

For example, if the Cronbach’s Alpha reliability coefficient of a 20-item exam is 0.70 and 40 items are added to the assessment (making the test 3x as long, so k=3), the estimated reliability of the new 60-item exam will be 3(0.70) / (1 + 2(0.70)) = 0.875, or about 0.88:

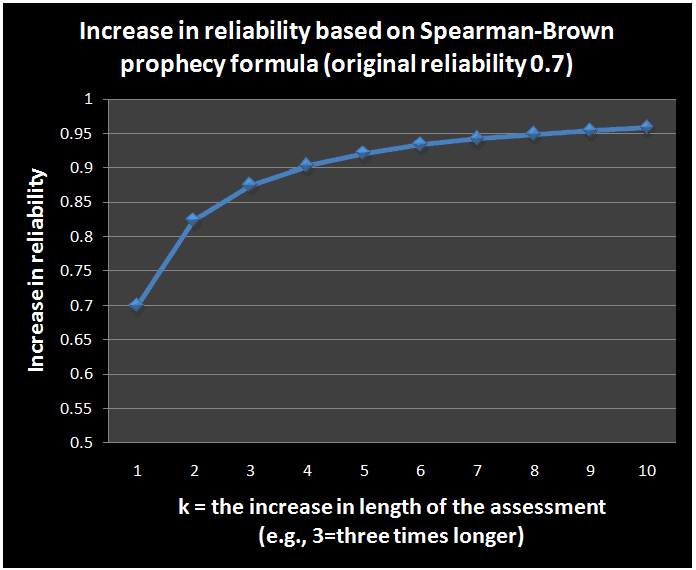

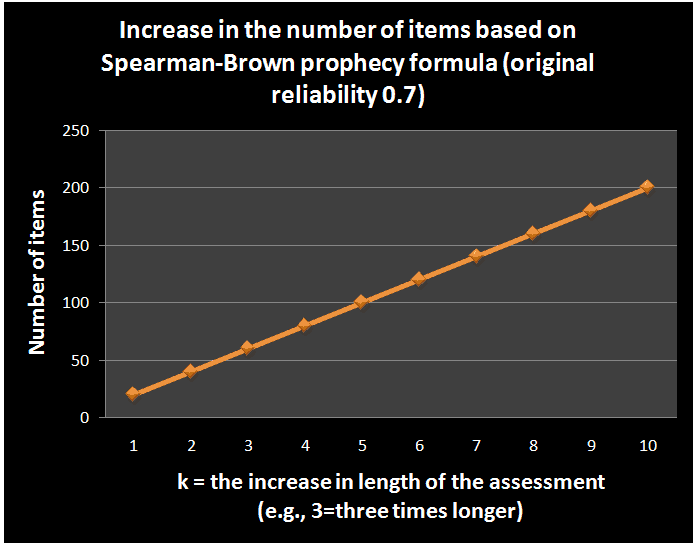
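The same arithmetic can be sketched in a few lines of Python. This is only an illustrative sketch, not part of any reporting tool: the function names `spearman_brown` and `length_factor_for_target` are hypothetical, and the second function simply rearranges the Spearman-Brown formula to solve for k given a target reliability. Like the formula itself, it assumes the added questions are comparable in quality to the existing ones.

```python
import math


def spearman_brown(r11: float, k: float) -> float:
    """Estimated reliability when the assessment is made k times longer.

    r11 is the existing internal consistency reliability (e.g., Cronbach's Alpha).
    """
    return (k * r11) / (1 + (k - 1) * r11)


def length_factor_for_target(r11: float, r_target: float) -> float:
    """Lengthening factor k needed to reach r_target.

    Hypothetical helper: the Spearman-Brown formula solved for k.
    """
    return (r_target * (1 - r11)) / (r11 * (1 - r_target))


# Worked example from the text: a 20-item exam with an alpha of 0.70.
current_items = 20
current_alpha = 0.70

# Tripling the length (20 -> 60 items) gives roughly 0.875, i.e. about 0.88.
predicted = spearman_brown(current_alpha, k=3)
print(f"Estimated reliability at 60 items: {predicted:.3f}")

# Working backwards from a target reliability of 0.90 (assumed target).
k_needed = length_factor_for_target(current_alpha, r_target=0.90)
items_needed = math.ceil(k_needed * current_items)  # round up to whole items
print(f"Lengthening factor needed: {k_needed:.2f}")
print(f"Approximate items needed:  {items_needed}")
```

Under these assumptions, reaching a reliability of 0.90 from this starting point would take a test a little under four times as long, which is consistent with high-stakes exams running to many dozens of questions.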

Let’s look at this information visually:

If you would like to learn more about validity and reliability, see our white papers.

I hope this helps to shed light on this burning psychometric issue!
