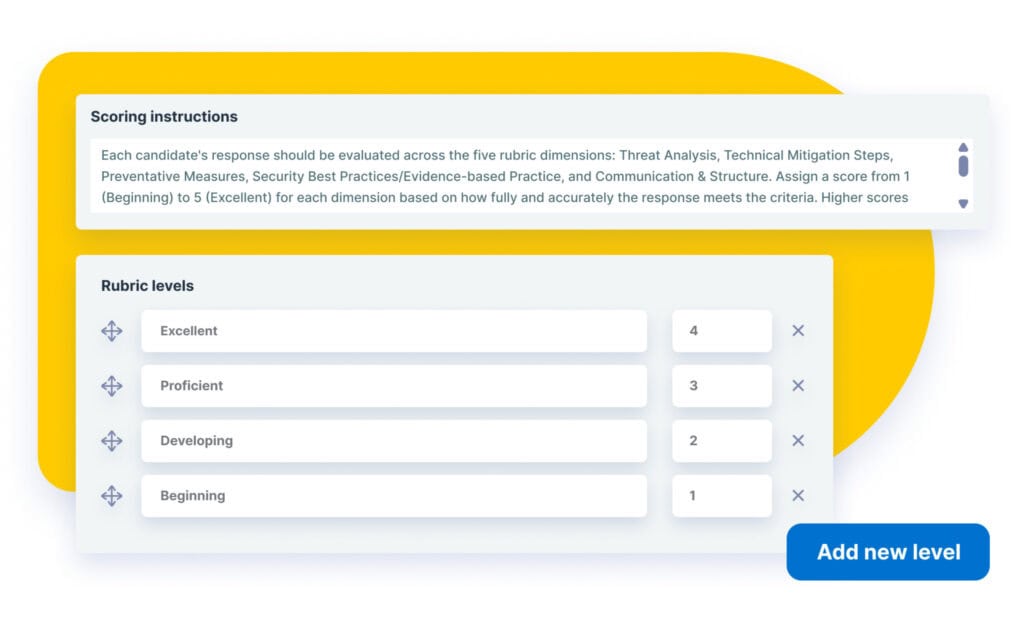

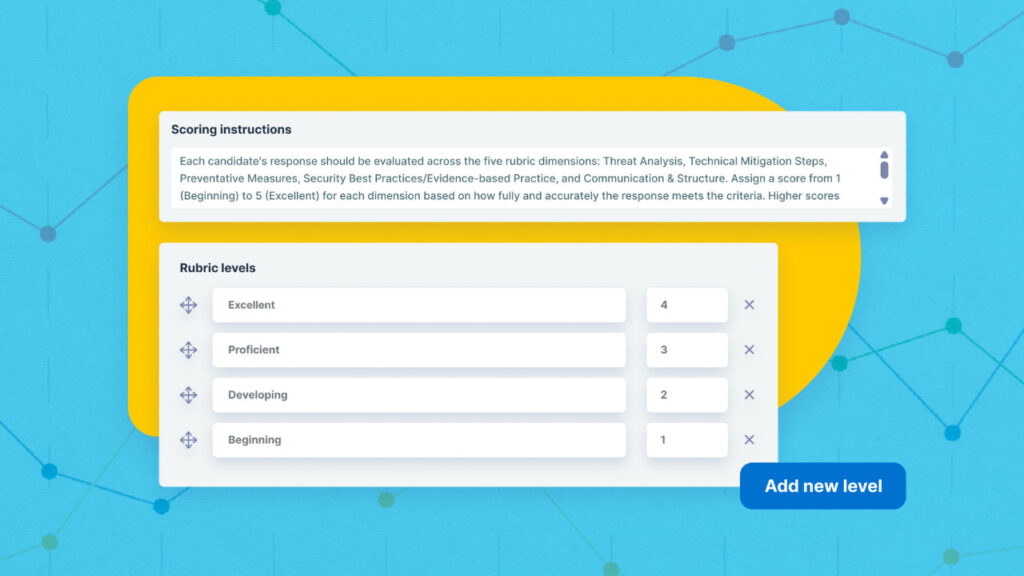

The rubric editor lets you create and manage analytic rubrics, which define how responses are scored across multiple dimensions using a consistent scale. You can add rubric levels such as Very Good, Good, Poor, and Incomplete, and define the criteria for each level. Each dimension in the rubric automatically uses these levels, ensuring consistent scoring.

To get started, create or edit an Essay or File Upload question in the Standard Item Bank and set the Scoring style to Analytic rubric. A scoring instructions field, rubric levels, and dimensions will appear in the question editor. You can add new levels or dimensions as needed, and edit descriptions and scores to match your program’s requirements. The maximum score for a question is calculated automatically based on the highest rubric level and the number of dimensions.
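As a rough illustration of the maximum score calculation, the sketch below assumes the maximum is the highest level score multiplied by the number of dimensions. The article only says the value is "based on" those two inputs, so the exact formula is an assumption. The class name, function name, score values, and dimension names are all hypothetical and are not part of the product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RubricLevel:
    """One rubric level shared by every dimension (hypothetical model)."""
    name: str
    score: int


def max_question_score(levels: list[RubricLevel], dimension_count: int) -> int:
    """Assumed formula: highest level score times the number of dimensions."""
    if not levels:
        raise ValueError("a rubric needs at least one level")
    if dimension_count < 1:
        raise ValueError("a rubric needs at least one dimension")
    return max(level.score for level in levels) * dimension_count


# Level names come from the article; the score values are illustrative only.
levels = [
    RubricLevel("Very Good", 3),
    RubricLevel("Good", 2),
    RubricLevel("Poor", 1),
    RubricLevel("Incomplete", 0),
]

# Hypothetical dimensions for an essay question.
dimensions = ["Thesis", "Evidence", "Organization"]

print(max_question_score(levels, len(dimensions)))
```

Under this assumption, adding a dimension or raising the score of the top level increases the maximum score, which would explain why the editor recalculates it automatically when you edit levels or dimensions.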

Once your rubric is set up, it integrates with the Scoring Tool. Graders can use the rubric to review responses, apply consistent scoring, and, if AI Scoring is enabled, receive AI-generated suggestions that they can adjust before submitting final scores. This workflow saves time, ensures accuracy, and keeps graders in control.