Following up on my posts last week on reliability, I thought I would finish up this theme by explaining the internal consistency reliability measure: Cronbach’s Alpha.

Cronbach’s Alpha produces the same results as the Kuder-Richardson Formula 20 (KR-20) internal consistency reliability for dichotomously scored questions (right/wrong, 1/0), but Cronbach’s Alpha also allows for the analysis of polytomously scored questions (partial credit, 0 to 5). This is why Questionmark products (e.g., Test Analysis Report, RMS) use Cronbach’s Alpha rather than KR-20.
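To make the equivalence concrete, here is a minimal Python sketch, not Questionmark's implementation. It assumes the standard textbook formulas with population variances throughout. The function names and the small `responses` matrix are made up for illustration.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's Alpha for a list of rows (one row of item scores per participant)."""
    k = len(scores[0])  # number of questions
    # Variance of each question's scores across participants.
    item_vars = [pvariance([row[i] for row in scores]) for i in range(k)]
    # Variance of participants' total scores.
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def kr20(scores):
    """KR-20 for dichotomous (1/0) scores, using p * q as each question's variance."""
    k = len(scores[0])
    n = len(scores)
    # p is the proportion of participants answering each question correctly.
    p_values = [sum(row[i] for row in scores) / n for i in range(k)]
    sum_pq = sum(p * (1 - p) for p in p_values)
    total_var = pvariance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum_pq / total_var)


# Hypothetical data: 6 participants, 4 dichotomously scored questions.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 0],
]

print(cronbach_alpha(responses), kr20(responses))  # the two values agree
```

The two agree because the population variance of a 1/0 question is exactly p × q. `cronbach_alpha` also accepts partial-credit scores (for example 0 to 5), whereas `kr20` is only meaningful for 1/0 data.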

People sometimes ask me about KR-21. This is a quick-and-dirty reliability estimate that produces values no higher than KR-20, and almost always lower. KR-21 assumes that all questions have equal difficulty (p-value), which makes hand calculation easier. That assumption is rarely close to reality, since questions on an assessment generally span a range of difficulty. This is why few people in the industry use KR-21 rather than KR-20 or Cronbach’s Alpha.
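For readers who want to see why KR-21 is easy to compute by hand, here is a small Python sketch of the standard textbook formula. It needs only the number of questions, the mean total score, and the variance of total scores. The function names and example totals are made up for illustration, and the population variance is assumed.

```python
from statistics import mean, pvariance


def kr21(k, mean_total, var_total):
    """KR-21 from summary statistics only.

    k          -- number of dichotomously scored questions
    mean_total -- mean of participants' total scores
    var_total  -- population variance of participants' total scores
    """
    # mean_total * (k - mean_total) / k equals k * p * q for the average
    # p-value, i.e. it treats every question as equally difficult.
    return k / (k - 1) * (1 - mean_total * (k - mean_total) / (k * var_total))


def kr21_from_totals(totals, k):
    """Convenience wrapper: compute KR-21 from a list of total scores."""
    return kr21(k, mean(totals), pvariance(totals))


# Hypothetical total scores for 6 participants on a 4-question assessment.
totals = [4, 3, 2, 1, 0, 3]
print(kr21_from_totals(totals, k=4))
```

Because the sum of the individual p × q terms can never exceed k times the p × q of the average p-value, this estimate cannot come out higher than KR-20 on the same data. The two match only when every question has the same difficulty.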

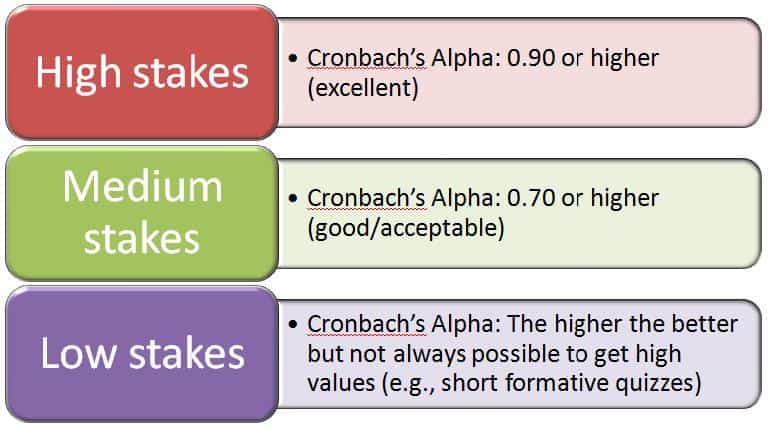

My colleagues and I generally consider Cronbach’s Alpha values of 0.90 or greater to be excellent and acceptable for high-stakes tests, while values of 0.70 to 0.90 are considered acceptable/good and appropriate for medium-stakes tests. Values below 0.50 are generally considered unacceptable. That said, in low-stakes testing situations it may not be possible to obtain high internal consistency reliability coefficients. In that context one might be better off evaluating the performance of an assessment on an item-by-item basis rather than focusing on the overall assessment reliability value.